Machines That Think Faster Than We Do

Can Humanity Keep Up?

January 26, 2025

If I had told someone a century ago that machines would one day be capable of composing symphonies, diagnosing diseases, and predicting human behavior, they would likely have called it science fiction. Today, it’s a reality. While artificial intelligence is dazzling everyone with its capabilities, it’s also forcing us to confront a question that cuts to the core of what it means to be human: Will we be able to keep up with the machines we’ve created? The coming wave of AI isn’t just a technological revolution. It’s an existential one. And if we don’t confront it with urgency and clarity, the future could look very different from what we imagine or hope for.

An Uncomfortable Truth

Artificial intelligence isn’t just a tool; it’s a mirror. It reflects our greatest hopes (a cure for cancer, smarter cities, an end to mundane tasks) and our deepest fears. The problem is that AI is advancing faster than our ability to guide it. Large language models now power applications that can write essays, code software, and create art at speeds unimaginable just a decade ago. But these systems are only as good as the data they’re trained on, and that data often carries the biases, inequalities, and blind spots of the people who created it. The result is that we may be building AI that amplifies existing injustices while moving too fast for us to hit pause.

A study on ethics and discrimination in artificial intelligence-enabled recruitment practices argues that algorithmic bias remains an issue, even though AI recruitment tools offer benefits such as improved recruitment quality, cost reduction, and increased efficiency. It found that bias in recruitment algorithms is evident with respect to gender, race, color, and personality. This isn’t an isolated finding. Biases in AI show up in many domains, from loan approvals to criminal sentencing algorithms. And as AI gets smarter, the consequences of these biases grow more profound.

The uncomfortable truth is that we are building machines that think faster than we do, but so far, we’re failing to ensure they think better than we do. I’m curious to see what role companies like Safe Superintelligence will play in creating a genuinely safe AI.

An Ethical Imperative

AI is not destiny but a choice. That choice hinges on one critical question: How do we align artificial intelligence with human values, especially when we can’t always agree on what those values should be?

Ethical AI requires more than good intentions. It demands robust frameworks, global cooperation, and accountability at every level. Let’s look at it through the lens of autonomous weapons: Should a machine have the power to decide who lives and dies in a conflict? The United Nations has been debating this question for years, but progress has been slow. Meanwhile, the technology keeps advancing. We should also consider AI’s role in shaping public opinion. Algorithms prioritize engagement, often at the expense of truth. We’ve seen how AI-driven social media platforms can spread misinformation faster than fact-checkers can keep up. The January 6th Capitol riots are a reminder of what happens when algorithms amplify division.

The stakes couldn’t be higher. Ensuring AI reflects diversity, fairness, and empathy isn’t just an ethical imperative but perhaps a survival strategy. The alternative is a world where the power of AI is concentrated in the hands of a few, deepening inequality and eroding trust in institutions.

Who Decides the Future?

AI doesn’t have an agenda (yet), but the people and organizations building it do. Right now, the answers to critical questions about AI’s purpose and direction are being shaped by a small group of tech giants, governments, and researchers. This concentration of power raises a fundamental issue: Who gets to decide the future? Will it be driven by the pursuit of profit, national security, or public good? It should arguably pursue a combination of all three, and the choices we make about AI today will echo for generations. If we’re not careful, we’ll end up with systems that reflect the priorities of a privileged few rather than the needs of the many. But there’s hope. Initiatives like the EU’s AI Act and the US Government’s AI Initiative are pushing for greater transparency and accountability, and OpenAI’s charter calls for ensuring that AGI (artificial general intelligence) benefits all of humanity (although I put a big question mark on that one, given OpenAI’s move towards becoming a for-profit corporation). These efforts are a start, but they need global support and enforcement to make a real impact.

What Can We Do About It?

AI is not our enemy. It’s a tool. But, like any tool, its impact depends on how we put it to use. To address the risks that AI poses, we must nurture global collaboration: AI doesn’t respect borders, and its governance shouldn’t either. We need international agreements that prioritize ethical development over technological arms races. We must encourage transparency and accountability: companies and governments developing AI must be open about how these systems work and whom they serve. And we must empower the public: the conversation about AI cannot be limited to tech elites. We need to rapidly democratize the understanding of AI so that everyone feels involved in shaping its future, or at least has an educated perspective.

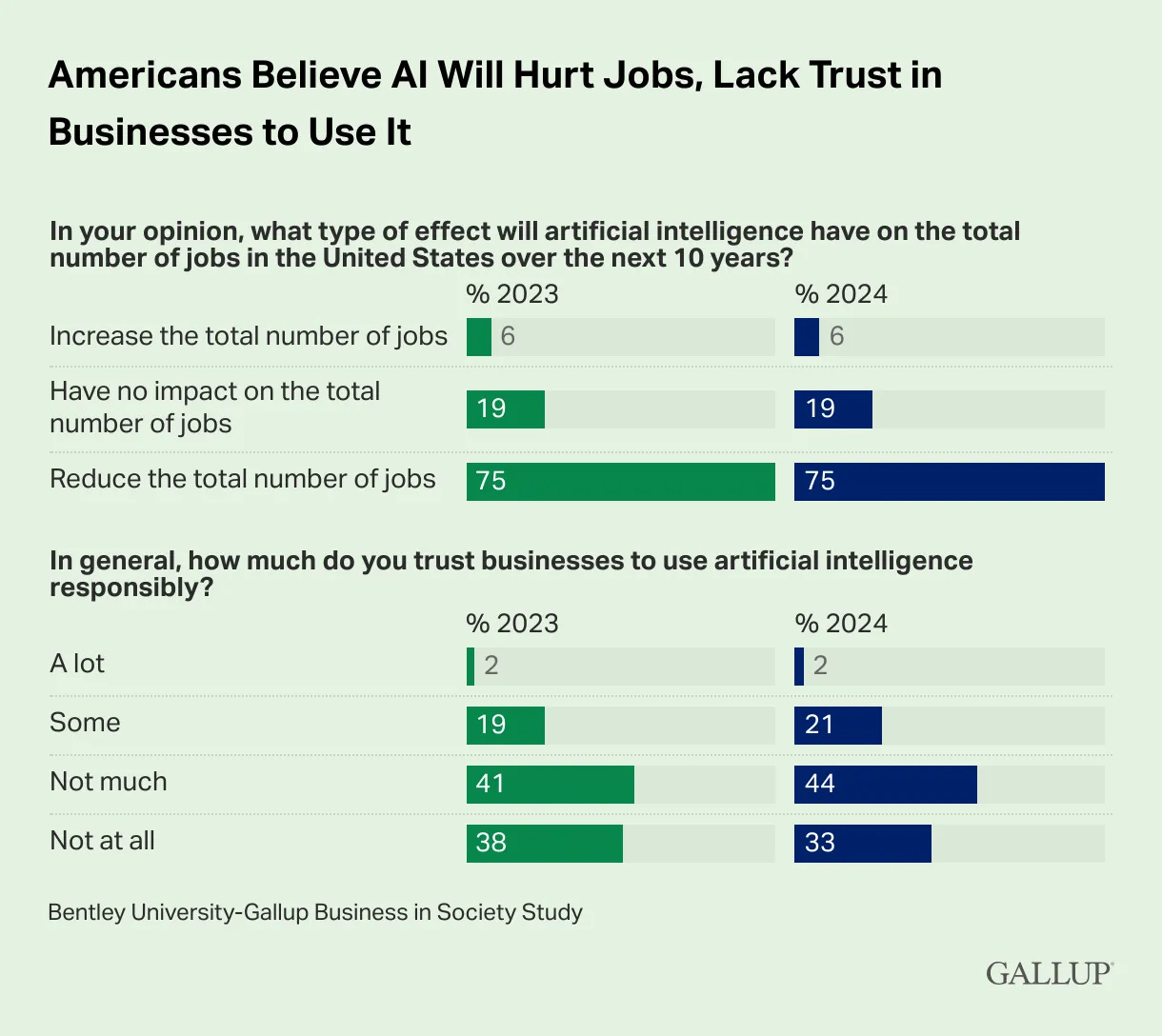

A recent survey shows that Americans express genuine concerns about AI, and few trust businesses to use it responsibly. That is precisely why transparency could help. Most importantly, we need to act with urgency: the pace of AI development isn’t slowing down, and every moment of inaction makes it harder to course-correct.

Will we use this technology to amplify our best qualities—curiosity, creativity, and compassion—or will we let it magnify our worst instincts—greed, bias, and indifference? We must make the right choice for ourselves and future generations.

Thanks for reading,

Yon

An AI assistant was used to help research and edit this letter.